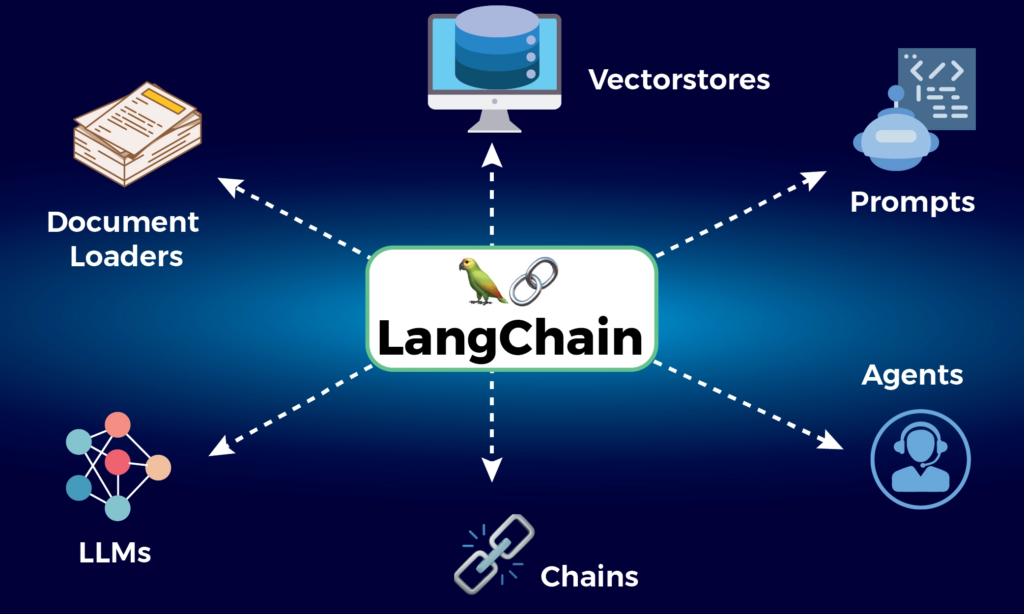

We recently integrated the LangChain framework into our LLM-driven projects, including LangChain Templates, LangServe, and LangSmith. LangChain facilitates communication between users and LLMs through vector databases and connects with leading providers such as Hugging Face, OpenAI, and Amazon Bedrock. Its functionality ranges from agents to Retrieval-Augmented Generation (RAG), enabling actions that go beyond simple chat responses.

Implementing text-triggered events was challenging, but LangChain helped us overcome these hurdles. Retrieval-Augmented Generation, a pivotal feature, improves user interaction by returning precise answers through semantic search over vector embeddings. We also tackled a problem in which user profiles are recommended based on skills, using embeddings to bridge the gap between free-text queries and user-provided skills. The embedded profiles in our vector database were retrieved using similarity metrics. On behalf of the end user, an LLM processed all the required information to create an ideal profile, and embedding similarity metrics ranked the recommended users.
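The skill-based ranking described above can be sketched roughly as follows. This is a minimal illustration, not our production code: `toy_embed` is a hypothetical bag-of-words stand-in for a real embedding model, the in-memory dictionary stands in for the vector database, and the names `recommend_profiles`, `profiles`, and `ideal` are made up for the example. The "ideal profile" string plays the role of the text an LLM would produce from the user's request.

```python
import math
import re
from collections import Counter


def toy_embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9+#]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors stored as Counters."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def recommend_profiles(
    ideal_profile: str, profiles: dict[str, str], top_k: int = 3
) -> list[tuple[str, float]]:
    """Rank stored profiles by similarity to the ideal profile, best first."""
    query_vec = toy_embed(ideal_profile)
    scored = [
        (name, cosine_similarity(query_vec, toy_embed(skills)))
        for name, skills in profiles.items()
    ]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]


# Assumed sample data: user-provided skills keyed by a made-up user name.
profiles = {
    "alice": "python machine learning nlp",
    "bob": "java spring backend",
    "carol": "python data engineering sql",
}

# In the real flow an LLM would generate this ideal profile from a free-text query.
ideal = "python nlp machine learning engineer"
ranked = recommend_profiles(ideal, profiles, top_k=2)
print(ranked)
```

With a real embedding model, semantically related skills (for example "NLP" and "text mining") would also score as similar, which exact word overlap in this toy version cannot capture.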

Integrating LangChain with our custom knowledge base on platforms like MongoDB and Amazon Bedrock significantly improves the user experience. LangChain’s ability to work with various types of vector databases makes it important to our projects, enabling smoother communication with LLMs and more advanced functionality.